Tutorial 6: Compute and Plot Temperature Anomalies#

Week 1, Day 1, Climate System Overview

Content creators: Sloane Garelick, Julia Kent

Content reviewers: Katrina Dobson, Younkap Nina Duplex, Danika Gupta, Maria Gonzalez, Will Gregory, Nahid Hasan, Paul Heubel, Sherry Mi, Beatriz Cosenza Muralles, Jenna Pearson, Agustina Pesce, Chi Zhang, Ohad Zivan

Content editors: Paul Heubel, Jenna Pearson, Chi Zhang, Ohad Zivan

Production editors: Wesley Banfield, Paul Heubel, Jenna Pearson, Konstantine Tsafatinos, Chi Zhang, Ohad Zivan

Our 2024 Sponsors: CMIP, NFDI4Earth


Pythia credit: Rose, B. E. J., Kent, J., Tyle, K., Clyne, J., Banihirwe, A., Camron, D., May, R., Grover, M., Ford, R. R., Paul, K., Morley, J., Eroglu, O., Kailyn, L., & Zacharias, A. (2023). Pythia Foundations (Version v2023.05.01) https://zenodo.org/record/8065851

Tutorial Objectives#

Estimated timing of tutorial: 20 minutes

In the previous tutorials, we explored global climate patterns and processes, focusing on the terrestrial, atmospheric, and oceanic climate systems. We saw that Earth’s energy budget, primarily controlled by incoming solar radiation, plays a crucial role in shaping Earth’s climate. In addition, other significant long-term climate forcings can influence global temperatures. To gain insight into these forcings, we need to look at historical temperature data, which offer a valuable point of comparison for assessing changes in temperature and understanding climatic influences.

Recent and future temperature change is often presented as an anomaly relative to a past climate state or historical period. For example, past and future temperature changes relative to pre-industrial average temperature are a common comparison.

In this tutorial, our objective is to deepen our understanding of these temperature anomalies. We will compute and plot the global temperature anomaly from 2000-01-15 to 2014-12-15, providing us with a clearer perspective on recent climatic changes.

Setup#

# installations ( uncomment and run this cell ONLY when using google colab or kaggle )

#!pip install pythia_datasets cftime nc-time-axis

# imports

import xarray as xr

from pythia_datasets import DATASETS

import matplotlib.pyplot as plt

Install and import feedback gadget#


# @title Install and import feedback gadget

!pip3 install vibecheck datatops --quiet

from vibecheck import DatatopsContentReviewContainer

def content_review(notebook_section: str):
    return DatatopsContentReviewContainer(
        "",  # No text prompt
        notebook_section,
        {
            "url": "https://pmyvdlilci.execute-api.us-east-1.amazonaws.com/klab",
            "name": "comptools_4clim",
            "user_key": "l5jpxuee",
        },
    ).render()

feedback_prefix = "W1D1_T6"

Figure Settings#


# @title Figure Settings

import ipywidgets as widgets # interactive display

%config InlineBackend.figure_format = 'retina'

plt.style.use(
    "https://raw.githubusercontent.com/neuromatch/climate-course-content/main/cma.mplstyle"
)

Video 1: Orbital Cycles#

Submit your feedback#


# @title Submit your feedback

content_review(f"{feedback_prefix}_Orbital_Cycles_Video")

If you want to download the slides: https://osf.io/download/tcb2q/

Submit your feedback#


# @title Submit your feedback

content_review(f"{feedback_prefix}_Orbital_Cycles_Slides")

Section 1: Compute Anomaly#

First, let’s load the same data that we used in the previous tutorial (monthly SST data from CESM2):

filepath = DATASETS.fetch("CESM2_sst_data.nc")

ds = xr.open_dataset(filepath)

ds

/opt/hostedtoolcache/Python/3.11.14/x64/lib/python3.11/site-packages/xarray/conventions.py:440: SerializationWarning: variable 'tos' has multiple fill values {1e+20, 1e+20}, decoding all values to NaN.

new_vars[k] = decode_cf_variable(

<xarray.Dataset> Size: 47MB

Dimensions: (time: 180, d2: 2, lat: 180, lon: 360)

Coordinates:

* time (time) object 1kB 2000-01-15 12:00:00 ... 2014-12-15 12:00:00

* lat (lat) float64 1kB -89.5 -88.5 -87.5 -86.5 ... 86.5 87.5 88.5 89.5

* lon (lon) float64 3kB 0.5 1.5 2.5 3.5 4.5 ... 356.5 357.5 358.5 359.5

Dimensions without coordinates: d2

Data variables:

time_bnds (time, d2) object 3kB ...

lat_bnds (lat, d2) float64 3kB ...

lon_bnds (lon, d2) float64 6kB ...

tos (time, lat, lon) float32 47MB ...

Attributes: (12/45)

Conventions: CF-1.7 CMIP-6.2

activity_id: CMIP

branch_method: standard

branch_time_in_child: 674885.0

branch_time_in_parent: 219000.0

case_id: 972

... ...

sub_experiment_id: none

table_id: Omon

tracking_id: hdl:21.14100/2975ffd3-1d7b-47e3-961a-33f212ea4eb2

variable_id: tos

variant_info: CMIP6 20th century experiments (1850-2014) with C...

variant_label: r11i1p1f1

We’ll compute the climatology using xarray’s .groupby() operation to split the SST data by month. Then, we’ll remove this climatology from our original data to find the anomaly:

# group all data by month

gb = ds.tos.groupby("time.month")

# take the mean over time to get monthly averages

tos_clim = gb.mean(dim="time")

# subtract this mean from all data of the same month

tos_anom = gb - tos_clim

tos_anom

<xarray.DataArray 'tos' (time: 180, lat: 180, lon: 360)> Size: 47MB

array([[[ nan, nan, nan, ..., nan,

nan, nan],

[ nan, nan, nan, ..., nan,

nan, nan],

[ nan, nan, nan, ..., nan,

nan, nan],

...,

[-0.01402271, -0.01401687, -0.01401365, ..., -0.01406252,

-0.01404917, -0.01403356],

[-0.01544118, -0.01544476, -0.01545036, ..., -0.0154475 ,

-0.01544321, -0.01544082],

[-0.01638114, -0.01639009, -0.01639998, ..., -0.01635301,

-0.01636147, -0.01637137]],

[[ nan, nan, nan, ..., nan,

nan, nan],

[ nan, nan, nan, ..., nan,

nan, nan],

[ nan, nan, nan, ..., nan,

nan, nan],

...

[ 0.01727939, 0.01713431, 0.01698041, ..., 0.0176847 ,

0.01755834, 0.01742125],

[ 0.0173862 , 0.0172919 , 0.01719594, ..., 0.01766813,

0.01757395, 0.01748013],

[ 0.01693714, 0.01687253, 0.01680517, ..., 0.01709175,

0.0170424 , 0.01699162]],

[[ nan, nan, nan, ..., nan,

nan, nan],

[ nan, nan, nan, ..., nan,

nan, nan],

[ nan, nan, nan, ..., nan,

nan, nan],

...,

[ 0.01506364, 0.01491845, 0.01476014, ..., 0.01545238,

0.0153321 , 0.01520228],

[ 0.0142287 , 0.01412642, 0.01402068, ..., 0.0145216 ,

0.01442552, 0.01432824],

[ 0.01320827, 0.01314461, 0.01307774, ..., 0.0133611 ,

0.0133127 , 0.01326215]]], dtype=float32)

Coordinates:

* time (time) object 1kB 2000-01-15 12:00:00 ... 2014-12-15 12:00:00

* lat (lat) float64 1kB -89.5 -88.5 -87.5 -86.5 ... 86.5 87.5 88.5 89.5

* lon (lon) float64 3kB 0.5 1.5 2.5 3.5 4.5 ... 356.5 357.5 358.5 359.5

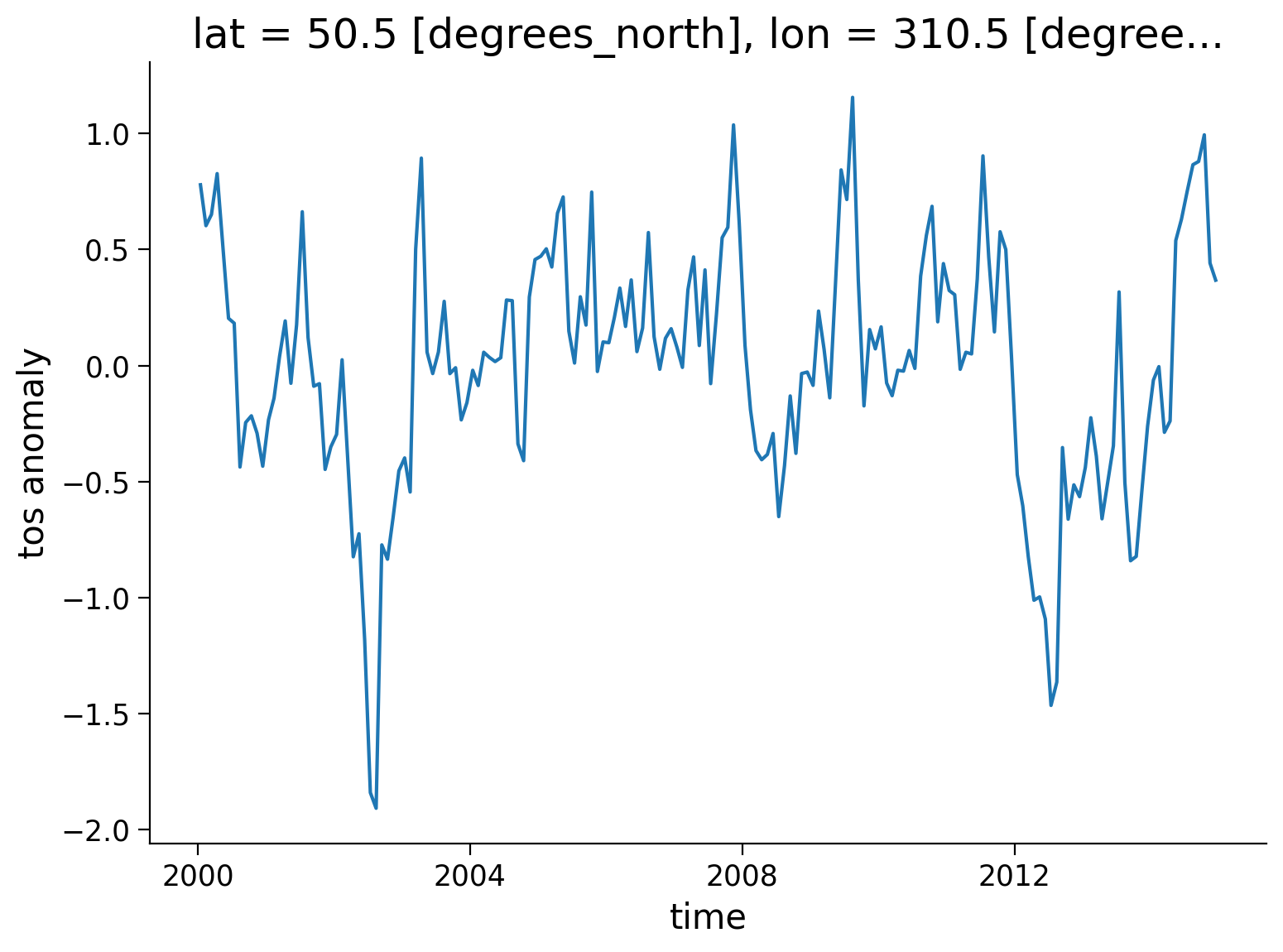

month (time) int64 1kB 1 2 3 4 5 6 7 8 9 10 11 ... 3 4 5 6 7 8 9 10 11 12Let’s try plotting the anomaly of a specific location:

tos_anom.sel(lon=310, lat=50, method="nearest").plot()

plt.ylabel("tos anomaly")

Text(0, 0.5, 'tos anomaly')

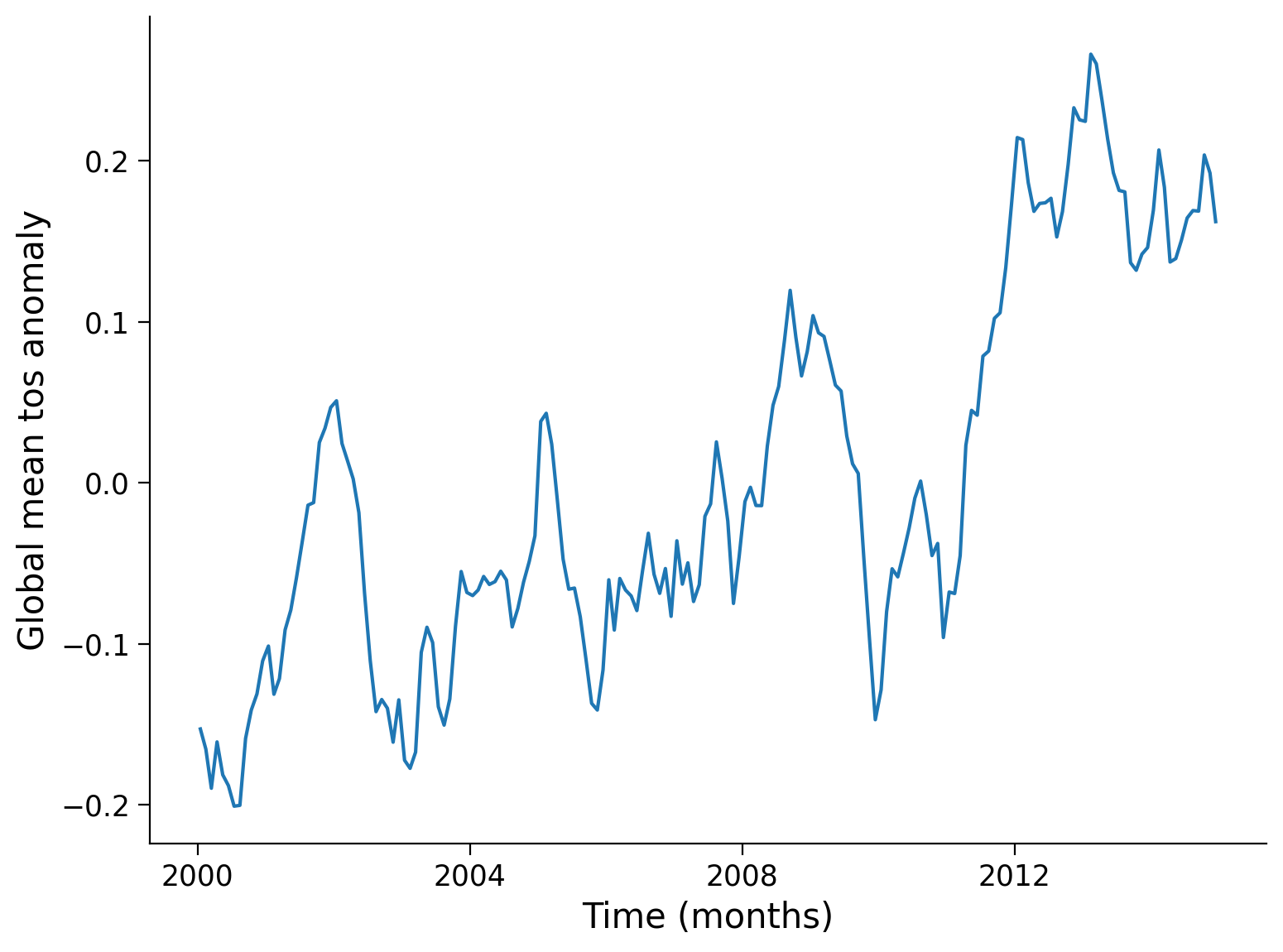
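To see what the groupby-based climatology and anomaly computation does, it can help to run the same three steps on a tiny synthetic series where the answer is known in advance. The sketch below is not part of the CESM2 workflow: the variable `toy` and its values (a repeating seasonal cycle plus a small linear trend) are made up for illustration, and it uses standard `datetime64` timestamps rather than the `cftime` objects in the SST file.

```python
import numpy as np
import pandas as pd
import xarray as xr

# Hypothetical toy data (NOT the CESM2 SST): three years of monthly values
# built from a repeating seasonal cycle plus a small linear warming trend.
time = pd.date_range("2000-01-01", periods=36, freq="MS")
months_elapsed = np.arange(36)
seasonal = 10.0 + 5.0 * np.sin(2 * np.pi * months_elapsed / 12)
trend = 0.01 * months_elapsed
toy = xr.DataArray(seasonal + trend, coords={"time": time}, dims="time", name="tos")

# Same three steps as in the tutorial:
gb = toy.groupby("time.month")   # split by calendar month
clim = gb.mean(dim="time")       # one mean value per calendar month
anom = gb - clim                 # subtract each month's mean from that month's data

# The seasonal cycle is removed; only the part of the trend that departs
# from each calendar month's three-year mean remains.
print(anom.values.round(2))
```

Because every calendar month appears exactly three times here, the anomaly is negative throughout the first year, close to zero in the second, and positive in the third, regardless of the seasonal cycle.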

Next, let’s compute and visualize the mean global anomaly over time. We need to specify both lat and lon dimensions in the dim argument to .mean():

unweighted_mean_global_anom = tos_anom.mean(dim=["lat", "lon"])

unweighted_mean_global_anom.plot()

# aesthetics

plt.ylabel("Global mean tos anomaly")

plt.xlabel("Time (months)")

Text(0.5, 0, 'Time (months)')

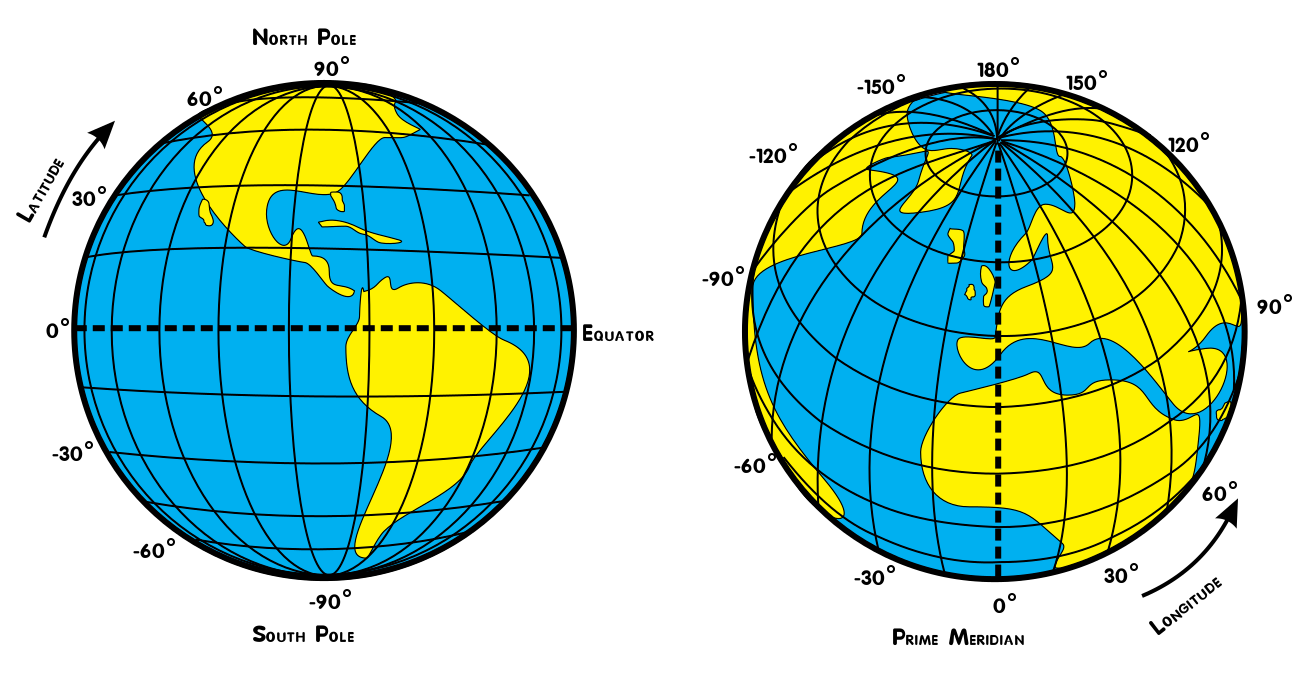

Notice that we called our variable unweighted_mean_global_anom. Next, we will compute weighted_mean_global_anom. Why do we need to weight our data? Grid cells that span the same range of degrees in latitude and longitude do not necessarily have the same area. Specifically, grid cells closer to the equator are much larger than those near the poles, as seen in the figure below (Djexplo, 2011, CC-BY).

Therefore, an operation that combines grid cells of different sizes, such as a global mean, is not scientifically valid unless each cell is weighted by its area. Xarray has a convenient .weighted() method to accomplish this.
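The effect of ignoring cell area can be illustrated with a synthetic field. The sketch below assumes a regular 1° latitude-longitude grid, on which cell area is proportional to the cosine of latitude; that cosine weighting is an assumption made for this illustration only, whereas the tutorial itself uses the model-provided cell areas. The field `polar` is hypothetical: it equals 1 poleward of 60° and 0 elsewhere.

```python
import numpy as np
import xarray as xr

# Hypothetical regular 1-degree grid (cell centers), for illustration only.
lat = np.arange(-89.5, 90.0, 1.0)
lon = np.arange(0.5, 360.0, 1.0)

# Synthetic field: 1 poleward of 60 degrees latitude, 0 everywhere else.
polar_mask = np.where(np.abs(lat)[:, None] > 60, 1.0, 0.0)
polar = xr.DataArray(
    polar_mask * np.ones((lat.size, lon.size)),
    coords={"lat": lat, "lon": lon},
    dims=("lat", "lon"),
)

# Assumption: on a regular lat-lon grid, cell area scales with cos(latitude).
weights = np.cos(np.deg2rad(polar.lat))
weights.name = "weights"

unweighted = polar.mean(dim=["lat", "lon"])
weighted = polar.weighted(weights).mean(dim=["lat", "lon"])

print(float(unweighted), float(weighted))
```

The unweighted mean treats the polar caps as one third of the globe because they occupy one third of the latitude rows, while the weighted mean reflects their much smaller share of the actual surface area.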

Let’s first load the grid cell area data from another CESM2 dataset that contains the weights for the grid cells:

filepath2 = DATASETS.fetch("CESM2_grid_variables.nc")

areacello = xr.open_dataset(filepath2).areacello

areacello

Downloading file 'CESM2_grid_variables.nc' from 'https://github.com/ProjectPythia/pythia-datasets/raw/main/data/CESM2_grid_variables.nc' to '/home/runner/.cache/pythia-datasets'.

<xarray.DataArray 'areacello' (lat: 180, lon: 360)> Size: 518kB

[64800 values with dtype=float64]

Coordinates:

* lat (lat) float64 1kB -89.5 -88.5 -87.5 -86.5 ... 86.5 87.5 88.5 89.5

* lon (lon) float64 3kB 0.5 1.5 2.5 3.5 4.5 ... 356.5 357.5 358.5 359.5

Attributes: (12/17)

cell_methods: area: sum

comment: TAREA

description: Cell areas for any grid used to report ocean variables an...

frequency: fx

id: areacello

long_name: Grid-Cell Area for Ocean Variables

... ...

time_label: None

time_title: No temporal dimensions ... fixed field

title: Grid-Cell Area for Ocean Variables

type: real

units: m2

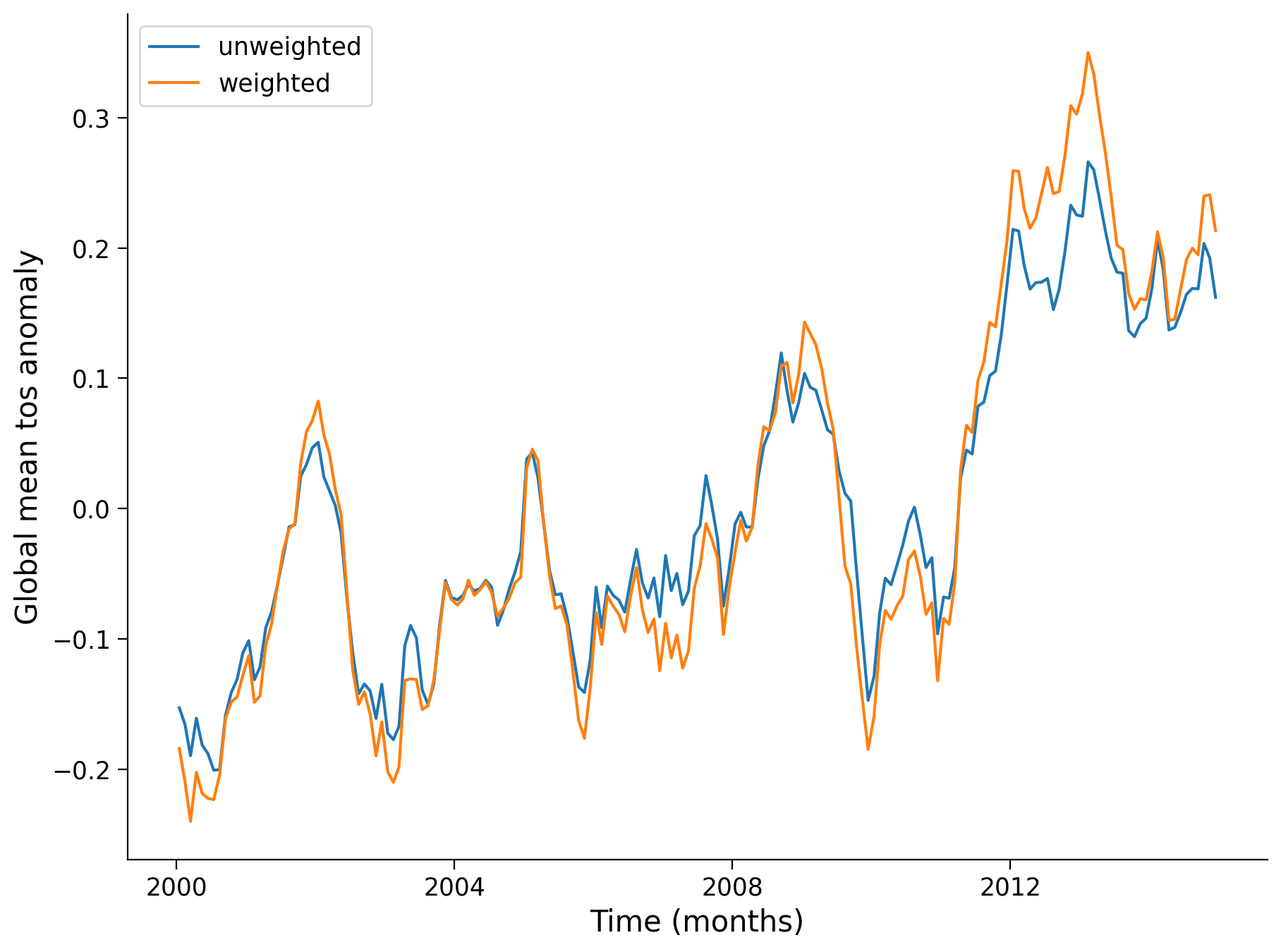

variable_id: areacelloLet’s calculate area-weighted mean global anomaly:

weighted_mean_global_anom = tos_anom.weighted(areacello).mean(dim=["lat", "lon"])
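As a sanity check on what a weighted mean computes, the sketch below reproduces it by hand on a hypothetical 2×2 grid with one missing cell, standing in for a land point in an ocean variable. The arrays `values` and `area` are invented for illustration. The manual version divides the area-weighted sum by the total area of the cells that actually hold data, which matches how xarray's weighted mean skips missing values.

```python
import numpy as np
import xarray as xr

# Hypothetical 2x2 grid: one missing (land-like) cell and unequal cell areas.
values = xr.DataArray([[1.0, np.nan], [3.0, 5.0]], dims=("lat", "lon"))
area = xr.DataArray([[1.0, 1.0], [2.0, 4.0]], dims=("lat", "lon"))

# Built-in weighted mean.
builtin = values.weighted(area).mean(dim=["lat", "lon"])

# Manual equivalent: sum(area * value) / sum(area), counting only valid cells.
valid = values.notnull()
weighted_sum = (values * area).sum(dim=["lat", "lon"])       # NaN cells are skipped
valid_area = area.where(valid).sum(dim=["lat", "lon"])       # area of valid cells only
manual = weighted_sum / valid_area

print(float(builtin), float(manual))
```

Dividing by the total area of all cells, including the missing one, would bias the mean low, which is why the denominator is restricted to valid cells.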

Let’s plot both unweighted and weighted means:

unweighted_mean_global_anom.plot(size=7)

weighted_mean_global_anom.plot()

plt.legend(["unweighted", "weighted"])

plt.ylabel("Global mean tos anomaly")

plt.xlabel("Time (months)")

Text(0.5, 0, 'Time (months)')

Questions 1: Climate Connection#

What is the significance of calculating area-weighted mean global temperature anomalies when assessing climate change? How are the weighted and unweighted SST means similar and different?

What overall trends do you observe in the global SST mean over this time? How do the magnitude and rate of this temperature change compare to past temperature variations on longer timescales (refer back to the figures in the video/slides)?

Submit your feedback#


# @title Submit your feedback

content_review(f"{feedback_prefix}_Questions_1")

Summary#

In this tutorial, we focused on historical temperature changes. We computed and plotted the global temperature anomaly from 2000 to 2014 and compared a weighted and unweighted time series of the global mean SST anomaly. This helped us enhance our understanding of recent climatic changes and their potential implications for the future.

Resources#

Code and data for this tutorial are based on existing content from Project Pythia.